
mkdir /hadoop/temp

mkdir /hadoop/namenode_home

mkdir /hadoop/datanode_home

cd /hadoop

ls

temp, namenode_home, and datanode_home have all been created.

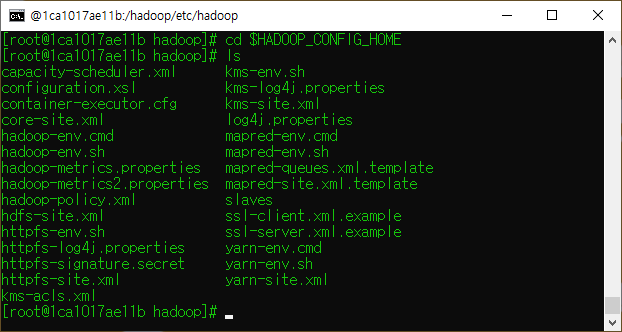

cd $HADOOP_CONFIG_HOME

ls

There are quite a lot of files in here!

We will create mapred-site.xml by copying mapred-site.xml.template.

cp mapred-site.xml.template mapred-site.xml

This copies the file mapred-site.xml.template under the new name mapred-site.xml.

vim mapred-site.xml

vim core-site.xml

vim hdfs-site.xml
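The three files are opened in vim here, but their contents are not shown. As a rough sketch only, the snippet below writes one plausible minimal core-site.xml and hdfs-site.xml that point Hadoop at the three directories created earlier. The address hdfs://localhost:9000, the replication value, and the CONF_DIR variable are assumptions rather than values taken from this post, and mapred-site.xml is left out because its intended contents cannot be inferred. By default it writes into a scratch directory so nothing real is overwritten.

```shell
#!/bin/sh
# Sketch: write minimal core-site.xml and hdfs-site.xml files.
# CONF_DIR is a hypothetical variable; it defaults to a scratch directory.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

cat > "$CONF_DIR/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/hadoop/temp</value>
  </property>
  <property>
    <!-- assumed host and port, not taken from the post -->
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF

cat > "$CONF_DIR/hdfs-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <!-- assumed value; a single-container setup has only one DataNode -->
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/hadoop/namenode_home</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/hadoop/datanode_home</value>
  </property>
</configuration>
EOF

echo "wrote config sketches to $CONF_DIR"
```

Review the generated files before copying anything into $HADOOP_CONFIG_HOME.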

hadoop namenode -format

start-all.sh

yes

yes

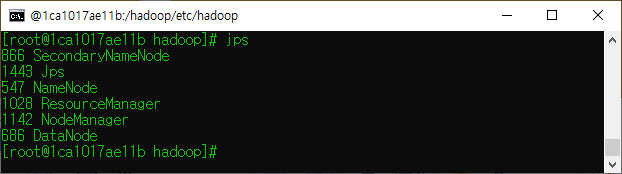

jps

hadoop fs -mkdir -p /test

cd $HADOOP_HOME

hadoop fs -put LICENSE.txt /test

hadoop fs -ls /test

These steps upload the LICENSE.txt file to the /test directory in HDFS.

hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.10.1.jar wordcount /test /test_out

hadoop fs -cat /test_out/*

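The wordcount example counts how many times each word occurs!

Up to this point, a single virtual machine (container) has acted as both Master and Slave.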
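To get a feel for what the job computes, the same counting can be approximated locally with standard Unix tools. This is only an illustration of the idea, not how the Hadoop example is implemented; count_words is a hypothetical helper name.

```shell
#!/bin/sh
# Local stand-in for the wordcount job: split input on whitespace,
# then print each distinct word with its count as "word<TAB>count".
count_words() {
  tr -s '[:space:]' '\n' | grep -v '^$' | LC_ALL=C sort | uniq -c | awk '{print $2 "\t" $1}'
}

printf 'apache hadoop\nhadoop license\n' | count_words
```

Running `count_words < LICENSE.txt` from $HADOOP_HOME gives something to compare against the contents of /test_out.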

rm -rf /hadoop/namenode_home

rm -rf /hadoop/datanode_home

mkdir /hadoop/namenode_home

mkdir /hadoop/datanode_home
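The four commands above wipe and recreate the NameNode and DataNode directories, and the same sequence comes up again later. A minimal sketch of wrapping it in a helper follows; reset_hdfs_dirs and HADOOP_BASE are hypothetical names, and the default base is a scratch directory rather than /hadoop.

```shell
#!/bin/sh
# Sketch: remove and recreate namenode_home and datanode_home under a base directory.
reset_hdfs_dirs() {
  base="${1:?usage: reset_hdfs_dirs BASE_DIR}"
  # ${base:?} aborts instead of expanding to an empty path
  rm -rf "${base:?}/namenode_home" "${base:?}/datanode_home"
  mkdir -p "$base/namenode_home" "$base/datanode_home"
}

# Defaults to a scratch directory; set HADOOP_BASE=/hadoop to act on the real one.
HADOOP_BASE="${HADOOP_BASE:-$(mktemp -d)}"
reset_hdfs_dirs "$HADOOP_BASE"
ls "$HADOOP_BASE"
```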

cd $HADOOP_CONFIG_HOME

vim core-site.xml

Change localhost to master.
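If you would rather not make this change by hand in vim, the substitution can be sketched with sed. The sample line written below is a placeholder, since the actual contents of core-site.xml are not shown in this post, and CORE_SITE is a hypothetical variable that defaults to a scratch file.

```shell
#!/bin/sh
# Sketch: replace every "localhost" with "master" in a config file.
# CORE_SITE defaults to a scratch file so the example is safe to run anywhere.
CORE_SITE="${CORE_SITE:-$(mktemp)}"

# Seed the scratch file with a placeholder line if it is empty (assumed content).
[ -s "$CORE_SITE" ] || echo '<value>hdfs://localhost:9000</value>' > "$CORE_SITE"

# Write to a temporary file and move it into place, which avoids
# the differences between GNU and BSD "sed -i".
sed 's/localhost/master/g' "$CORE_SITE" > "$CORE_SITE.tmp" && mv "$CORE_SITE.tmp" "$CORE_SITE"

cat "$CORE_SITE"
```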

vim mapred-site.xml

exit

docker ps

docker stop hadoop

docker commit hadoop myhadoop

If the container is still running, stop it with docker stop hadoop; if it is not running, you can skip that command.

docker commit hadoop myhadoop saves the container we built under the name hadoop as an image called myhadoop.

docker run -it -h master --name master -p 5070:50070 myhadoop

Here the external (host) port is 5070 and the internal (container) port is 50070.

exit

docker ps

Leave the container with exit; docker ps then shows that master is no longer running.

docker run -it -h slave1 --name slave1 --link master:master myhadoop

docker run -it -h slave2 --name slave2 --link master:master myhadoop

docker run -it -h slave3 --name slave3 --link master:master myhadoop

docker start master

docker start slave1

docker start slave2

docker start slave3

docker inspect master | find "IPAddress"

On a Mac, run docker inspect master | grep IPAddress instead.

172.17.0.2 is master.

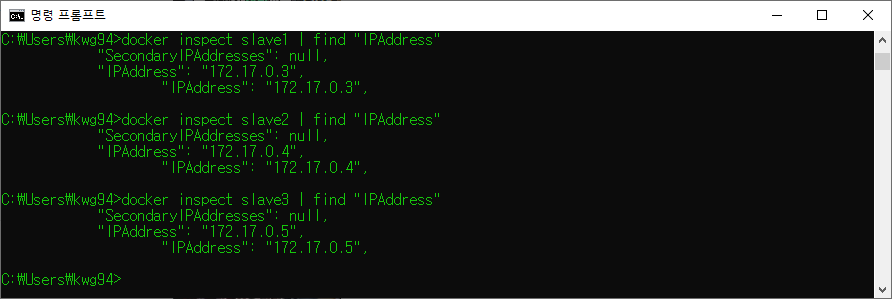

docker inspect slave1 | find "IPAddress"

docker inspect slave2 | find "IPAddress"

docker inspect slave3 | find "IPAddress"

Substituting 1, 2, and 3 in turn, the IP address goes up by one each time.

172.17.0.3 is slave1, 172.17.0.4 is slave2, and 172.17.0.5 is slave3.
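The find and grep filters print every line that mentions IPAddress. As a small sketch, the helper below keeps only the first non-empty address from docker inspect output read on standard input. ip_from_inspect is a hypothetical name, and the sample input is merely shaped like the relevant lines of real output.

```shell
#!/bin/sh
# Sketch: extract the first non-empty "IPAddress" value from `docker inspect` output on stdin.
ip_from_inspect() {
  sed -n 's/.*"IPAddress": *"\([0-9][0-9.]*\)".*/\1/p' | head -n 1
}

# Illustrative sample input; with Docker available you would pipe real output instead.
printf '%s\n' '"SecondaryIPAddresses": null,' '"IPAddress": "172.17.0.2",' | ip_from_inspect
```

With the containers running, `docker inspect slave1 | ip_from_inspect` would be the equivalent call.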

docker exec -it master bash

vim /etc/hosts

Opening the file in vim shows that master is already mapped to 172.17.0.2.

Let's add the slaves as well.
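Using the addresses found with docker inspect earlier, the entries to add would look like the lines in this sketch. HOSTS_FILE is a hypothetical variable that defaults to a scratch file; the addresses on your machine may differ, so check them before touching the real /etc/hosts.

```shell
#!/bin/sh
# Sketch: append the slave entries to a hosts file.
# HOSTS_FILE defaults to a scratch file; set HOSTS_FILE=/etc/hosts inside the master container.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

cat >> "$HOSTS_FILE" <<'EOF'
172.17.0.3 slave1
172.17.0.4 slave2
172.17.0.5 slave3
EOF

cat "$HOSTS_FILE"
```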

cd $HADOOP_CONFIG_HOME

ls

vim masters

The masters file is empty when you open it. Write master in it.

vim slaves

The slaves file contains localhost; delete that line and fill in the new entries instead.
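The post does not show what goes into slaves. A reasonable guess, given the three containers created above, is one hostname per line, as in this sketch. The slaves contents are an assumption, and CONF_DIR is a hypothetical variable that defaults to a scratch directory.

```shell
#!/bin/sh
# Sketch: write the masters and slaves files.
# CONF_DIR defaults to a scratch directory; use $HADOOP_CONFIG_HOME for the real files.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

# masters: the single master hostname, as stated in the post
echo master > "$CONF_DIR/masters"

# slaves: assumed to list the three slave containers, one per line
printf '%s\n' slave1 slave2 slave3 > "$CONF_DIR/slaves"

cat "$CONF_DIR/masters" "$CONF_DIR/slaves"
```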

ssh slave1

yes

exit

ssh slave2

yes

exit

ssh slave3

yes

exit

Once each host has been accepted this way, ssh no longer stops to prompt you when you move back and forth between the nodes.

hadoop namenode -format

Y

hadoop datanode -format

start-dfs.sh

yes

start-yarn.sh

Open localhost:5070 in a browser and you should see the Hadoop NameNode web page.

stop-dfs.sh

stop-yarn.sh

rm -rf /hadoop/namenode_home

rm -rf /hadoop/datanode_home

mkdir /hadoop/namenode_home

mkdir /hadoop/datanode_home

ssh slave1

exit

ssh slave2

exit

ssh slave3

exit

start-dfs.sh

start-yarn.sh
